As 2026 announces itself as the year where “the hombres will be separated from the niños”, I’d like to look back at the evolution of GenAI and offer a few predictions for the next two to three years.

When ChatGPT was launched in November 2022, it immediately raised high hopes for a new wave in machine learning, making interaction with neural networks more intuitive through natural language. As time progressed, the models were trained on ever-increasing data volumes, because the incumbents saw this as the only way to win the platform war, and they pitched first-mover advantage to their investors to raise funds at surreal valuations. Now they are finding out two things. One: size is not the distinguishing factor in the platform competition; the competition is heading towards purpose-built, well-curated models. Two: there is no platform competition, because the platforms were already there: Microsoft integrating ChatGPT in Office 365 and Google doing the same with Gemini in its product suite.

Other platforms such as Meta, shopping platforms such as Amazon and Alibaba, and banking applications either promote home-grown LLMs or use one or more of the hundreds of thousands of open-access models they can find on Hugging Face.

Nevertheless, one aspect of platform economics is emerging: the creation of exit barriers. Once you have chosen a platform to integrate into your business applications, the SDKs and the models, used and improved by RAG and other means (which we are working on), will increase your switching costs.

The first three years of GenAI were largely about overpromising and underdelivering. I have not checked whether Gartner already talks about the trough of disillusionment, but I have met a few clients where disillusion has set in. Organisations that reduced their after-sales service staff and replaced them with chatbots have had to reverse their decision. We all know of lawyers being fined for producing phony legal precedents. Only a couple of weeks ago, a judge in California fined the plaintiffs’ law firm Hagens Berman, one of its partners, and another lawyer a combined $13,000 for the “misuse” of artificial intelligence in several court filings in a lawsuit against the parent company of the adult content social media site OnlyFans.

Many GenAI offers are “inside out”, as when a top ChatGPT official bluntly stated that users will need to learn what they can do with the product. To use Lee James and Warren Schirtzinger’s concept of the marketing chasm, later popularised by Geoffrey Moore, we are very much pleasing the techies, but the pragmatists are left out in the cold, waiting on the other side of the chasm for a useful application that caters to their needs. That doesn’t absolve the pragmatists from the obligation to examine the impact of GenAI on their organisation, their business processes and their talent management. But today, little effort is made to support them in that endeavour.

Forget about network effects, it’s all about trusted data

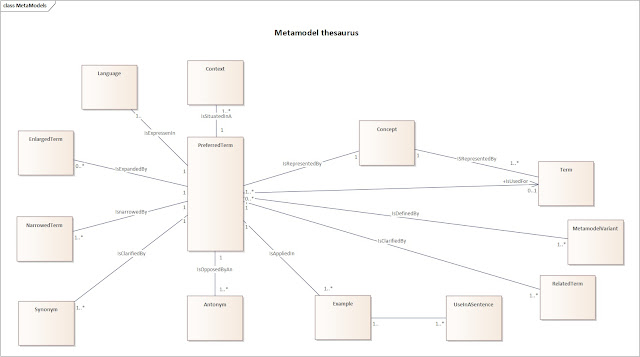

The real battle will be fought on the data terrain. This is where our knowledge modelling methodology comes into play. Its output combines the strengths of “older” machine learning techniques with state-of-the-art LLM technologies and prepares domain knowledge for reliable use in a well-defined, professional context.

It is also about scope management. There is a simple law, empirically confirmed over and over, that captures the correlation between the consistency and the scope of information: the smaller the scope, the higher the consistency.

Let me give you two examples to illustrate this thesis.

In the accounting domain, the concept of cash flow is unequivocally defined as the money that flows in and out of a business, measured from three perspectives: operations, investing and financing. Cash flow is an important metric for determining the value of a business.

But a business valuation is in itself a fuzzier concept, composed of various, sometimes contradictory metrics, because not only quantitative metrics like free cash flow, debt-to-equity ratio and price/earnings ratio are under scrutiny. There are also many qualitative, fuzzier metrics and evaluations, such as risk, customer loyalty, market position, growth potential and brand preference, that are equally important to this concept.
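To make the contrast concrete: the crisp side of it fits in a few lines of code, while the fuzzy side does not. The sketch below is a minimal illustration, assuming the standard three-section cash flow statement, in which the net change in cash is simply the sum of the operating, investing and financing sections. The class name and the figures are hypothetical and are not part of our knowledge modelling methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CashFlowStatement:
    """Hypothetical minimal model of the three cash flow perspectives."""

    operating: float  # cash generated or consumed by day-to-day operations
    investing: float  # e.g. buying assets (negative) or selling them (positive)
    financing: float  # e.g. new borrowing (positive), repayments or dividends (negative)

    def net_change_in_cash(self) -> float:
        # The crisp building block: three well-defined parts add up to one unambiguous number.
        return self.operating + self.investing + self.financing


# Hypothetical figures, for illustration only.
stmt = CashFlowStatement(operating=120_000.0, investing=-45_000.0, financing=-30_000.0)
print(stmt.net_change_in_cash())  # 45000.0
```

No comparable one-liner exists for a business valuation: weighting risk, customer loyalty or brand preference against free cash flow is a judgement call, which is exactly why the scope of such a concept makes it less consistent.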

In the supply chain domain, Solventure’s Bram De Smet pointed out in his book “Supply Chain Strategy and Financial Metrics” that crisply defined metrics like cash, cost and service level become a dynamic and fluid mix as a function of your business strategy. Cash, cost and service level may be opposing forces in realising an optimum outcome that supports the strategic direction. The author leverages Treacy & Wiersema’s model of value disciplines, showing how product leadership, customer intimacy and operational excellence each imply a different preferred position on the service–cost–cash triangle.

Crawford & Matthews’ five value drivers (price, access, service, product, experience) are used to further link market strategy choices to supply chain and financial design.

What we need in GenAI is a method, models and software that support the low-level, crisp and well-defined knowledge building blocks while also producing meaningful concepts that combine these building blocks in a reliable way. Imagine if, on top of that, we could implement de Bono’s lateral thinking as a proxy for creativity…

The S-curve, where are we in GenAI?

GenAI technology started out as an expensive platform war with ever-increasing investments in GPUs, data volumes and training efforts. But contrary to the classic S-curve evolution, it was rapidly and widely adopted, as the major players seeded the market with free, universally available chatbots in a browser or an app, Microsoft making its move with Copilot and Google introducing Gemini in Google Search. True to the S-curve’s pattern, however, improvement is slow while the fundamental concepts are being figured out. This is where we are today.

When the period of rapid innovation and massive adoption will follow is hard to predict, but there are signs it won’t take too long. I keep seeing more and more colleagues investigating real-life use cases instead of treating GenAI as a glorified search engine or an evolved autocomplete. I think that before 2028 we will see some real killer apps, as streaming music and video, e-commerce and social media were for the Internet era.

We hope the application we are working on will one day be among GenAI’s killer apps.