Introduction

In this article I will define what data governance (DG) for Generative Artificial Intelligence (GenAI) is and how it differs from DG as we have known it for decades in the world of transaction systems (OLTP) and analytical systems (OLAP and Data Mining).

In a second post, I will make the case for DG based on the use case at hand and illustrate a few GenAI DG use cases that are feasible and fit the patterns and the framework.

The “Umwertung aller Werte” (revaluation of all values)

The German philosopher Friedrich Nietzsche postulated that all existing values should be discarded and replaced by values that, until then, had been considered undesirable.

This is what comes to mind when I examine some GenAI use cases and look at the widely accepted data governance policies, rules and practices.

Here are the old values that will be replaced:

• Establish data standards;

• The data model as a contract;

• Data glossary and data lineage, the universal truths;

• Data quality, data consistency and data security enforcement;

• Data stewardship based on a subject area.

Establish data standards

As the DAMA-DMBOK states: “Data standards and guidelines include naming standards, requirement specification standards, data modelling standards, database design standards, architecture standards, and procedural standards for each data management function.”

This approach couldn’t be further from DG for GenAI. Data standards are mostly about “spelling”, which has very little impact on semantics. What remains of data standards belongs to the realm of tagging, where subject matter experts assign a standardised meaning to various syntactical expressions. So we can have tagging standards for supervised learning, but even those can depend on “the eye of the beholder”, i.e. the use case.
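A minimal Python sketch of tagging standards that depend on the use case. The use-case names, expressions and tags are hypothetical; the point is only that the same syntactical expression receives a different standardised meaning depending on the beholder, while differences in “spelling” are normalised away before the lookup.

```python
# Hypothetical tagging standards: each use case maps raw expressions
# to its own standardised tag. Names and tags are illustrative only.
TAGGING_STANDARDS: dict[str, dict[str, str]] = {
    "customer_service": {
        "cancel my order": "INTENT_CANCELLATION",
        "where is my parcel": "INTENT_DELIVERY_STATUS",
    },
    "churn_analysis": {
        "cancel my order": "SIGNAL_CHURN_RISK",
        "where is my parcel": "SIGNAL_SERVICE_FRICTION",
    },
}


def tag(expression: str, use_case: str, default: str = "UNTAGGED") -> str:
    """Return the standardised tag for an expression under a given use case."""
    standard = TAGGING_STANDARDS.get(use_case, {})
    # Normalise the "spelling" first: case and surrounding whitespace carry no meaning here.
    return standard.get(expression.strip().lower(), default)


print(tag("Cancel my order ", "customer_service"))  # INTENT_CANCELLATION
print(tag("Cancel my order ", "churn_analysis"))    # SIGNAL_CHURN_RISK
```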

Granted, we can discuss which language model and vector database best fit the use case at hand, but optimising the infrastructure will be an ongoing process of trial and error, and the outcome certainly won’t be a general recommendation for all use cases.

As for requirement specification standards, I’ll give them a pass as long as they don’t kill the creativity needed to deal with GenAI, since identifying business needs for information and data is not always a linear process. The greatest value of GenAI lies in discovering answers to questions you never dreamt of asking.

The data model as a contract

The data model as a contract works fine for transaction systems and classic Business Intelligence data architectures, where a star schema or a data vault models the world view of the stakeholders. In GenAI, the only contract is the aforementioned tagging and metadata specification that makes sure the data are exploitable.
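A minimal sketch of what such a tagging and metadata contract could look like in practice, assuming each document arrives as a Python dict with a `metadata` field. The required keys and the sensitivity vocabulary are hypothetical; each use case would define its own.

```python
from typing import Any

# Hypothetical metadata contract for one use case: the keys every document
# must carry before it counts as exploitable, plus one controlled vocabulary.
REQUIRED_METADATA = {"source", "language", "owner", "sensitivity"}
ALLOWED_SENSITIVITY = {"public", "internal", "confidential"}


def contract_violations(document: dict[str, Any]) -> list[str]:
    """List the ways a document breaks the metadata contract (empty list = compliant)."""
    metadata = document.get("metadata", {})
    problems = [f"missing '{key}'" for key in sorted(REQUIRED_METADATA - set(metadata))]
    sensitivity = metadata.get("sensitivity")
    if sensitivity is not None and sensitivity not in ALLOWED_SENSITIVITY:
        problems.append(f"unknown sensitivity '{sensitivity}'")
    return problems


doc = {
    "text": "Returns are accepted within 30 days.",
    "metadata": {"source": "intranet", "language": "en", "sensitivity": "secret"},
}
print(contract_violations(doc))
# ["missing 'owner'", "unknown sensitivity 'secret'"]
```

Unlike a data model, this contract says nothing about the structure of the text itself; it only guarantees that the document can be found, filtered and governed.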

Data glossary and data lineage, universal truths?

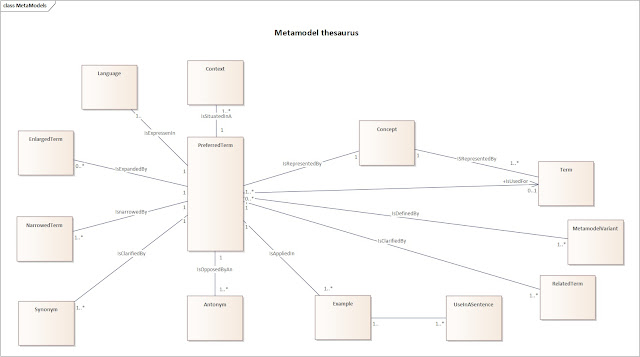

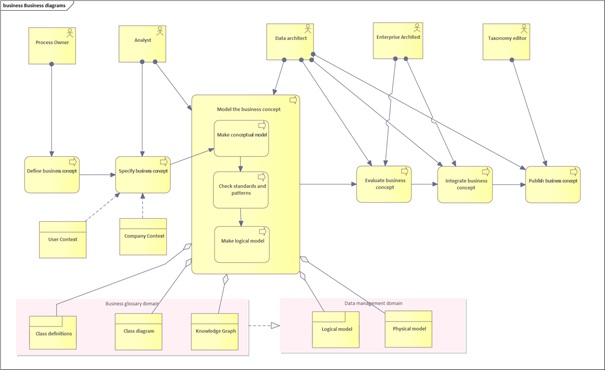

No longer. The use case context determines the glossary and, if intermediate steps are involved before the data become accessible, the lineage. Definitions may change with the context, and so may the data transformations that prepare the data for the task at hand.
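The idea that glossary and lineage are a function of the use case can be sketched as a lookup keyed on both the term and the use case. The terms, use cases, definitions and transformation steps below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class GlossaryEntry:
    definition: str
    # Ordered transformation steps applied before the data are accessible.
    lineage: list[str] = field(default_factory=list)


# Hypothetical glossary: the same term has its own definition and lineage per use case.
GLOSSARY: dict[tuple[str, str], GlossaryEntry] = {
    ("customer", "billing"): GlossaryEntry(
        "A party with at least one invoice in the last 24 months",
        ["crm_export", "deduplicate_accounts"],
    ),
    ("customer", "support_assistant"): GlossaryEntry(
        "Anyone who has contacted support, invoiced or not",
        ["ticket_dump", "strip_personal_data", "chunk_documents", "embed_chunks"],
    ),
}


def lookup(term: str, use_case: str) -> GlossaryEntry:
    """Return the definition and lineage of a term in the context of one use case."""
    try:
        return GLOSSARY[(term, use_case)]
    except KeyError:
        raise KeyError(f"'{term}' is not defined for use case '{use_case}'") from None


entry = lookup("customer", "support_assistant")
print(entry.definition)          # Anyone who has contacted support, invoiced or not
print(" -> ".join(entry.lineage))  # ticket_dump -> strip_personal_data -> chunk_documents -> embed_chunks
```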

Data quality, data consistency and data security enforcement

In old-school data governance policies, data quality (DQ) is first about complying with specs, and only then does “fit for purpose” come in as the deciding criterion, as I described in Business Analysis for Business Intelligence (1):

Data quality for BI purposes is defined and gauged with reference to fitness for purpose, as defined by the analytical use of the data, and to compliance with three levels of data quality as defined by:

[Level 1] database administrators

[Level 2] data warehouse architects

[Level 3] business intelligence analysts

On level 1, data quality is narrowed down to data integrity or the degree to which the attributes of an instance describe the instance accurately and whether the attributes are valid, i.e. comply with defined ranges or definitions managed by the business users. This definition remains very close to the transaction view.
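A level-1 check in this sense can be sketched as validating each attribute of a record against a defined range or a list of valid values. The attribute names and rules are hypothetical.

```python
from typing import Any, Callable

# Hypothetical level-1 rules: each attribute must fall within a defined
# range or belong to a list of values managed by the business users.
RULES: dict[str, Callable[[Any], bool]] = {
    "age": lambda v: isinstance(v, int) and 18 <= v <= 120,
    "country": lambda v: v in {"BE", "NL", "DE", "FR"},
    "order_total": lambda v: isinstance(v, (int, float)) and v >= 0,
}


def invalid_attributes(record: dict[str, Any]) -> list[str]:
    """Return the attributes of a record that are missing or violate their rule."""
    return [name for name, is_valid in RULES.items()
            if name not in record or not is_valid(record[name])]


records = [
    {"age": 34, "country": "BE", "order_total": 59.90},
    {"age": 7, "country": "XX", "order_total": 12.0},
]
for record in records:
    print(invalid_attributes(record))
# []
# ['age', 'country']
```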

On level 2, data quality is expressed as the percentage of completeness and correctness of the analytical perspectives. In other words, to what degree is each dimension and each fact table complete enough to produce significant information for analytical purposes? Issues like sparsity and spread in the data values are harder to tackle. Timeliness and consistency need to be controlled and managed at the data warehouse level.
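The level-2 measures can be sketched as two simple ratios, assuming a dimension or fact table is available as a plain list of rows: completeness as the percentage of non-missing values per column, and cell coverage as the share of possible dimension-member combinations that actually hold facts (one minus coverage being a crude sparsity measure). Table and column names are hypothetical; in practice these queries would run against the warehouse itself.

```python
from typing import Any


def completeness(rows: list[dict[str, Any]], columns: list[str]) -> dict[str, float]:
    """Percentage of rows in which each column holds a non-missing value."""
    if not rows:
        return {column: 0.0 for column in columns}
    return {
        column: 100.0 * sum(row.get(column) not in (None, "") for row in rows) / len(rows)
        for column in columns
    }


def cell_coverage(rows: list[dict[str, Any]], dimensions: list[str]) -> float:
    """Share of possible dimension-member combinations that hold at least one fact row."""
    observed = {tuple(row[d] for d in dimensions) for row in rows}
    possible = 1
    for d in dimensions:
        possible *= len({row[d] for row in rows})
    return len(observed) / possible if rows else 0.0


# Hypothetical fact rows.
sales = [
    {"product": "dress", "region": "north", "amount": 120.0},
    {"product": "dress", "region": "south", "amount": None},
    {"product": "coat", "region": "north", "amount": 80.0},
]
print({k: round(v, 1) for k, v in completeness(sales, ["product", "region", "amount"]).items()})
# {'product': 100.0, 'region': 100.0, 'amount': 66.7}
print(cell_coverage(sales, ["product", "region"]))
# 0.75
```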

On level 3, data quality is the extent to which the available data are capable of adequately answering the business questions. Some use the criterion of accessibility with regard to the usability and clarity of the data. Although this seems a somewhat vague definition, it is most relevant to anyone with some analytical mileage on his odometer. I remember a vast data mining project at a mail order company producing the following astonishing result: 99.9% of all dresses sold were bought by women!

In GenAI, we can pay less attention to level 1 and emphasise the higher-level aspects of data quality. There, the true challenge lies in testing the validity of three interacting aspects of GenAI data: quality, quantity and density. Quality in the sense of “fit for use case” means reducing bias and identifying trustworthy sources; quantity means guaranteeing sufficient data to include all patterns, expected and unexpected; and finally, density means making sure the language model can deliver meaningful proximity measures between the concepts in the data set.
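Density is the least familiar of the three. One possible way to gauge it, assuming the concepts in the data set have already been turned into embedding vectors by the language model, is to check how close each concept is to its nearest neighbour by cosine similarity: a concept that is isolated from all others suggests the data give the model too little context to relate it. The vectors are toy values, the 0.5 threshold is an arbitrary assumption, and this is one heuristic among many, not a standard metric.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    norms = math.hypot(*a) * math.hypot(*b)
    if norms == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / norms


def isolated_concepts(embeddings: dict[str, list[float]], threshold: float = 0.5) -> list[str]:
    """Concepts whose most similar neighbour scores below the threshold."""
    isolated = []
    for name, vector in embeddings.items():
        nearest = max(
            (cosine(vector, other) for other_name, other in embeddings.items() if other_name != name),
            default=0.0,
        )
        if nearest < threshold:
            isolated.append(name)
    return isolated


# Toy three-dimensional "embeddings"; real ones would come from the language model.
embeddings = {
    "dress": [0.9, 0.1, 0.0],
    "skirt": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}
print(isolated_concepts(embeddings))
# ['invoice']
```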

Data stewardship based on a subject area

A business data steward, according to DAMA, is a knowledge worker and business leader recognized as a subject matter expert who is assigned accountability for the data specifications and data quality of specifically assigned business entities, subject areas or databases, who will: (…)

4. Ensure the validity and relevance of assigned data model subject areas

5. Define and maintain data quality requirements and business rules for assigned data attributes.

It is clear that this definition needs adjustments. Here is my concept of a data steward for GenAI data:

It is, of course, a knowledge worker who is familiar with the use case that the GenAI data set needs to satisfy. This may be a single subject matter expert (SME), but in most cases he or she will be the coach and integrator of several SMEs to grasp the complexity of the data set under scrutiny. He or she will be more of a data quality gauge than a DQ prescriber and, together with the analysts and language model builders, will take measures to enhance the quality of the output rather than investing too much effort in the input.

Let me explain this before any misconceptions arise. The SME asks the system questions to which he knows the answers, checks the output and uses retrieval-augmented generation (RAG) to improve the answers. If he notices that all the answers fall short on conciseness, he may adjust the chunk size of the input, but without changing the input itself. Meanwhile, some developers are working on automated feedback learning loops that will improve the performance of the SME because, as you can imagine, coming up with all sorts of questions and evaluating the answers is a time-consuming task.
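Part of this routine can be scripted. The Python sketch below assumes a hypothetical source text and a few hypothetical “golden” questions whose answers the SME already knows. It splits the same, unchanged text at several chunk sizes, retrieves the best-matching chunk for each question with a naive word-overlap score (a stand-in for a real vector search), and reports how often the known answer ends up in the retrieved chunk. No language model is called; in a real RAG pipeline the retrieved chunk would be passed to the model and the generated answer checked instead.

```python
import re

# Hypothetical source text; it stays unchanged, only the chunking varies.
SOURCE = (
    "Returns are accepted within 30 days of delivery. "
    "Refunds are paid to the original payment method within 5 working days. "
    "Parcels are shipped from the warehouse in Ghent. "
    "Customer service is open on weekdays from 9 to 17."
)

# Hypothetical golden questions with the answers the SME already knows.
GOLDEN = [
    ("Within how many days are returns accepted?", "30 days"),
    ("Where are parcels shipped from?", "Ghent"),
    ("When is customer service open?", "weekdays"),
]


def words(text: str) -> set[str]:
    """Lower-case word set, punctuation ignored."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def chunk(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    tokens = text.split()
    return [" ".join(tokens[i:i + size]) for i in range(0, len(tokens), size)]


def retrieve(question: str, chunks: list[str]) -> str:
    """Naive retriever: the chunk sharing the most words with the question (first wins ties)."""
    q = words(question)
    return max(chunks, key=lambda c: len(q & words(c)))


def hit_rate(text: str, golden: list[tuple[str, str]], size: int) -> float:
    """Share of golden questions whose known answer appears in the retrieved chunk."""
    chunks = chunk(text, size)
    hits = sum(answer.lower() in retrieve(question, chunks).lower() for question, answer in golden)
    return hits / len(golden)


for size in (4, 8, 16, 64):
    print(size, round(hit_rate(SOURCE, GOLDEN, size), 2))
# 4 0.0
# 8 0.33
# 16 0.67
# 64 1.0
```

In this toy run, larger chunks simply win. In practice, overly large chunks dilute the retrieved context and make the generated answers less concise, which is exactly the trade-off the SME is tuning.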

In conclusion

Today, data governance for GenAI is more about enablement than control. It prioritises the creative use of data while ensuring that the data are used ethically and transparently. This approach comes fully into its own in Explainable Artificial Intelligence (XAI); I refer to a previous blog post on this subject.

Since unstructured data like documents and images are in scope, more flexible and adaptive metadata management is key. AI itself is now being used to monitor and implement data policies. Tools like Alation, Ataccama and Alex Solutions have done groundbreaking work in this area, and Microsoft Purview is, as always, catching up. New challenges are emerging: ensuring quality and accuracy is not always feasible with images, and integrating data from diverse sources in diverse unstructured formats is a challenge as well.

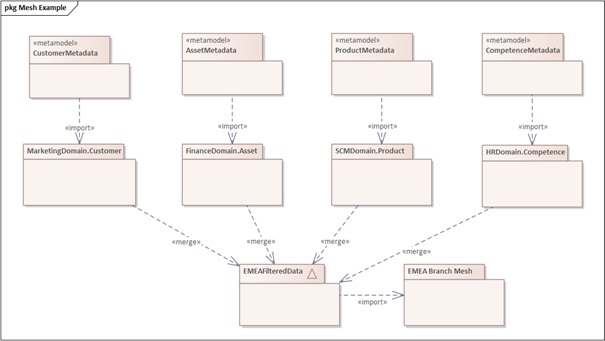

The more new use cases we develop for GenAI, the more we experience a universal law: data governance for GenAI is use case based, as I will demonstrate in the next blog post. This calls for a flexible and adaptive governance framework that monitors, governs and enforces rules for the use of the data (not too strictly, unless privacy is at stake). In other words, the same data set may be subject to various, clearly distinguishable governance frameworks, dictated by the use case.
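A use-case-based framework of this kind might be sketched as a set of policies keyed on the use case and applied to one and the same data set, with the privacy rule enforced strictly whatever the use case. The use cases, fields, the `quality_score` attribute (assumed to be computed upstream) and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any

# Privacy-sensitive fields are masked in every use case: the one rule that stays strict.
PRIVATE_FIELDS = frozenset({"email", "phone"})


@dataclass(frozen=True)
class UseCasePolicy:
    allowed_fields: frozenset[str]
    min_quality_score: float  # hypothetical quality gate between 0 and 1


POLICIES = {
    "marketing_copy_assistant": UseCasePolicy(frozenset({"product", "review_text", "email"}), 0.3),
    "complaint_triage": UseCasePolicy(frozenset({"product", "review_text", "rating", "phone"}), 0.7),
}


def govern(records: list[dict[str, Any]], use_case: str) -> list[dict[str, Any]]:
    """Return the view of the shared data set that one use case is allowed to see."""
    policy = POLICIES[use_case]
    governed = []
    for record in records:
        if record.get("quality_score", 0.0) < policy.min_quality_score:
            continue  # too weak for this use case, possibly fine for another
        view = {}
        for key, value in record.items():
            if key in policy.allowed_fields:
                view[key] = "***" if key in PRIVATE_FIELDS else value
        governed.append(view)
    return governed


# One shared, hypothetical data set.
reviews = [
    {"product": "dress", "review_text": "Lovely fabric", "rating": 5,
     "email": "a@example.com", "quality_score": 0.9},
    {"product": "coat", "review_text": "meh", "rating": 2,
     "phone": "+32 000 00 00", "quality_score": 0.4},
]
print(govern(reviews, "complaint_triage"))
# [{'product': 'dress', 'review_text': 'Lovely fabric', 'rating': 5}]
```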

________________________________________________________________________________

(1) Business Analysis for Business Intelligence, CRC Press 2012, Bert Brijs p. 272