“Data lakehousing” is all about good housekeeping of your data. There is, of course, room for ungoverned data in a quarantine area, but if you want to make use of the structured and especially the semi-structured and unstructured data, you had better govern the influx of data before your data lake becomes a swamp that produces no value whatsoever.

Three data flavours need three different treatments

Structured data are relatively easy to manage: profile the data and look for referential integrity failures, outliers, free text that may need categorising, etc. In short: harmonise the data with the target model, which can be one or more unrelated tables or a set of data marts, to produce meaningful analytical data.
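Two of the profiling checks mentioned above can be sketched in a few lines of Python. This is a minimal illustration rather than a full profiling tool: the `customers` and `orders` tables, their column names and the helper functions are hypothetical, and the modified z-score with a 3.5 cut-off is only one common way to flag outliers.

```python
from statistics import median

# Hypothetical sample data: customers (parent table) and orders (child table).
customers = [{"customer_id": 1}, {"customer_id": 2}, {"customer_id": 3}]
orders = [
    {"order_id": 10, "customer_id": 1, "amount": 20.0},
    {"order_id": 11, "customer_id": 2, "amount": 22.5},
    {"order_id": 12, "customer_id": 2, "amount": 19.0},
    {"order_id": 13, "customer_id": 9, "amount": 21.0},   # orphan: no customer 9
    {"order_id": 14, "customer_id": 3, "amount": 950.0},  # suspicious amount
    {"order_id": 15, "customer_id": 1, "amount": 23.0},
]


def orphan_rows(child_rows, parent_rows, key):
    """Return child rows whose foreign key has no match in the parent table."""
    parent_keys = {row[key] for row in parent_rows}
    return [row for row in child_rows if row[key] not in parent_keys]


def mad_outliers(rows, column, threshold=3.5):
    """Flag rows by modified z-score, based on the median absolute deviation."""
    values = [row[column] for row in rows]
    med = median(values)
    mad = median(abs(value - med) for value in values)
    if mad == 0:
        return []  # no spread around the median, nothing to flag
    return [row for row in rows if 0.6745 * abs(row[column] - med) / mad > threshold]


orphans = orphan_rows(orders, customers, "customer_id")
outliers = mad_outliers(orders, "amount")
print([row["order_id"] for row in orphans])
print([row["order_id"] for row in outliers])
```

Rows flagged by either check are candidates for correction or for the quarantine area before they are harmonised with the target model.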

Semi-structured data demand a data pipeline that can combine the structured aspects of clickstream or log file analysis with the less structured parts, such as search terms. The pipeline also takes care of matching IP addresses with geolocation data, a mapping that needs regular refreshing since ISPs sometimes sell blocks of IP ranges to colleagues abroad.
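As a rough sketch of such a pipeline step, the Python below parses one web server log line, pulls the search terms out of the query string and looks up the country for the IP address. Several things here are assumptions for illustration: the Common Log Format layout, the `q` query parameter, and the tiny `GEO_BLOCKS` table, which uses reserved documentation IP ranges rather than real allocations.

```python
import ipaddress
import re
from urllib.parse import parse_qs, urlsplit

# Hypothetical geolocation table: network block -> country code.
# These ranges are reserved for documentation; they are not real allocations.
GEO_BLOCKS = {
    ipaddress.ip_network("192.0.2.0/24"): "BE",
    ipaddress.ip_network("198.51.100.0/24"): "NL",
}

# Assumed layout: Common Log Format (host, identity, user, timestamp, request, status, size).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" (?P<status>\d{3}) \S+'
)


def lookup_country(ip_text):
    """Return the country code of the block containing the address, or None."""
    address = ipaddress.ip_address(ip_text)
    for network, country in GEO_BLOCKS.items():
        if address in network:
            return country
    return None


def parse_log_line(line):
    """Turn one log line into a record with structured and less structured parts."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None  # unparseable line: a candidate for the quarantine area
    record = match.groupdict()
    query = parse_qs(urlsplit(record["url"]).query)
    record["status"] = int(record["status"])
    record["search_terms"] = query.get("q", [""])[0].split()
    record["country"] = lookup_country(record["ip"])
    return record


line = '192.0.2.17 - - [10/Oct/2023:13:55:36 +0000] "GET /search?q=data+lake HTTP/1.1" 200 512'
record = parse_log_line(line)
print(record["country"], record["search_terms"])
```

Because IP blocks change hands, a lookup table like `GEO_BLOCKS` would in practice be reloaded from a maintained geolocation source on a regular schedule.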

Unstructured data like text files from social media, e-mails, blog posts, documents and the like need more complex treatment. It’s all about finding structure in these data. Preparing these data for text mining requires many disambiguation steps to get from text input to meaning output:

- Tokenization of the input is the process of splitting a text object into smaller chunks known as tokens. These tokens can be single words or word combinations, characters, numbers, symbols, or n-grams.

- Normalisation of the input: stripping prefixes and/or suffixes from a word to arrive at its base form, e.g. unnatural -> nature

- Reduce certain word forms to their lemma, e.g. the infinitive of a conjugated verb

- Tag parts of speech with their grammatical function: verb, adjective, etc.

- Parse words as a function of their position and type

- Check for modality and negations: “could”, “should”, “must”, “maybe”, etc. express modality

- Disambiguate the sense of words: “very” can be either a positive or a negative term, depending on what follows

- Semantic role labelling: determine the function of the words in a sentence: is the subject an agent or the subject of an action in “I have been treated for hepatitis B”? What is the goal or the result of the action in “I sold the house to a real estate company”?

- Named entity recognition: categorising text into pre-defined categories like person names, organisation names, location names, time denominations, quantities, monetary values, titles, percentages, etc.

- Co-reference resolution: determining when two or more expressions in a sentence refer to the same object: “Bert bought the book from Alice but she warned him he would soon get bored of the author’s style as it was a tedious way of writing.” In this sentence, “him” and “he” refer to “Bert”, “she” refers to “Alice” while “it” refers to “the author’s style”.
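To make the first few steps of the list above concrete, here is a deliberately naive Python sketch of tokenization, n-grams, suffix stripping and a modality/negation check. The marker sets, the suffix list and all function names are assumptions made for illustration; steps such as lemmatisation, part-of-speech tagging, semantic role labelling and co-reference resolution need trained models from a dedicated NLP library and are not attempted here.

```python
import re

# Toy marker lists, assumed for illustration only.
MODAL_MARKERS = {"could", "should", "must", "may", "might", "maybe"}
NEGATION_MARKERS = {"not", "no", "never"}
SUFFIXES = ("ing", "ed", "s")


def tokenize(text):
    """Split text into lowercase word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def ngrams(tokens, n):
    """Return all runs of n consecutive tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def strip_suffix(token):
    """Naive normalisation: drop the first matching suffix, keeping at least 3 characters."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def flag_modality_and_negation(tokens):
    """List the tokens that signal modality or negation."""
    return {
        "modal": [t for t in tokens if t in MODAL_MARKERS],
        "negated": [t for t in tokens if t in NEGATION_MARKERS],
    }


tokens = tokenize("Maybe the treatment should not be repeated.")
stems = [strip_suffix(t) for t in tokens]
bigrams = ngrams(tokens, 2)
flags = flag_modality_and_negation(tokens)
print(stems)
print(flags)
```

Even this toy version shows why the steps are chained: the modality check only works on clean tokens, and everything downstream inherits the mistakes of the normalisation step.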

What architectural components support these treatments?

The first two data types can be handled with classical Extract, Transform and Load (ETL) or Extract, Load and Transform (ELT) pipelines. Ample documentation about these processes is listed in the footnote below[1].

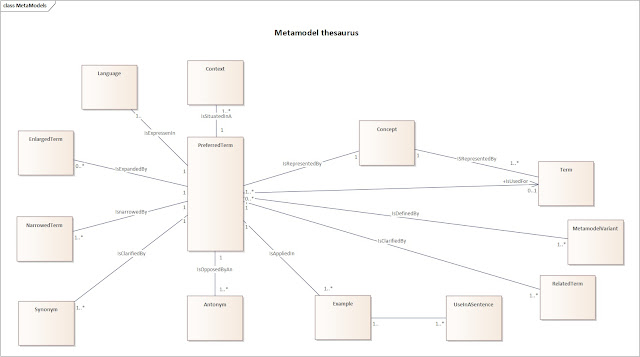
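The difference between the two pipeline styles can be shown with a small Python sketch that uses the standard library's `sqlite3` module as a stand-in for the target platform. The sample rows, table names and cleaning rules are hypothetical; the point is only where the transformation happens: in the pipeline before loading (ETL) or inside the database after loading (ELT).

```python
import sqlite3

# Hypothetical raw extract: every field arrives as text, country codes are untidy.
raw_rows = [("1", " be", "20.50"), ("2", "NL ", "19.00"), ("3", "be", "7.25")]


def run_etl(connection, rows):
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    cleaned = [(int(i), country.strip().upper(), float(amount)) for i, country, amount in rows]
    connection.execute("CREATE TABLE sales_etl (id INTEGER, country TEXT, amount REAL)")
    connection.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", cleaned)


def run_elt(connection, rows):
    """ELT: load the raw rows as-is, then transform with SQL inside the database."""
    connection.execute("CREATE TABLE sales_raw (id TEXT, country TEXT, amount TEXT)")
    connection.executemany("INSERT INTO sales_raw VALUES (?, ?, ?)", rows)
    connection.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT CAST(id AS INTEGER) AS id, UPPER(TRIM(country)) AS country, "
        "CAST(amount AS REAL) AS amount FROM sales_raw"
    )


def totals(connection, table):
    """Sum the amounts per country for one of the tables created above."""
    query = f"SELECT country, SUM(amount) FROM {table} GROUP BY country ORDER BY country"
    return connection.execute(query).fetchall()


connection = sqlite3.connect(":memory:")
run_etl(connection, raw_rows)
run_elt(connection, raw_rows)
print(totals(connection, "sales_etl"))
print(totals(connection, "sales_elt"))
```

Both routes end with the same analytical result; the ELT variant additionally keeps the untouched `sales_raw` table, which fits the lakehouse habit of retaining raw data next to the governed version.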

But for processing unstructured data, you need to develop classifiers, thesauri and ontologies to represent your “knowledge inventory” as a reference model for the text analytics. This takes a lot of resources and careful analysis to make sure you come up with a complete yet practical set of tools to support named entity recognition.
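A thesaurus used as a reference model can be pictured as a lookup from surface forms to a canonical name and a category. The Python sketch below is a minimal, dictionary-based take on named entity recognition under that assumption; the `THESAURUS` entries and the `find_entities` helper are hypothetical, and a production setup would typically combine such lists with trained classifiers.

```python
import re

# Hypothetical knowledge inventory: surface forms (including synonyms) mapped to
# a canonical name and an entity category.
THESAURUS = {
    "ibm": ("IBM", "ORGANISATION"),
    "international business machines": ("IBM", "ORGANISATION"),
    "brussels": ("Brussels", "LOCATION"),
    "bruxelles": ("Brussels", "LOCATION"),
}


def find_entities(text, thesaurus=THESAURUS):
    """Return (start, matched text, canonical name, category), preferring longer terms."""
    found = []
    taken = []  # character spans already claimed by a longer term
    for term in sorted(thesaurus, key=len, reverse=True):
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for match in pattern.finditer(text):
            if any(match.start() < end and start < match.end() for start, end in taken):
                continue  # overlaps an entity that was already recognised
            taken.append(match.span())
            canonical, category = thesaurus[term]
            found.append((match.start(), match.group(0), canonical, category))
    return sorted(found)


entities = find_entities("International Business Machines opened an office in Bruxelles.")
for _, surface, canonical, category in entities:
    print(f"{surface} -> {canonical} ({category})")
```

The effort sits in the dictionary rather than in the code: every synonym, spelling variant and category has to be collected and maintained, which is where the resources and careful analysis go.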

The conclusion is straightforward: the less structure is predefined in your data, the more data governance effort is needed.

[1] Three reliable sources, each with their nuances and perspectives on ETL/ELT:

https://aws.amazon.com/what-is/etl/

https://www.ibm.com/topics/etl

https://www.snowflake.com/guides/what-etl