There is no harm in being a bit visionary sometimes…

For the last three or four decades, the gap between online transaction processing (OLTP) and business intelligence(BI) was impossible to cross. Neither technology nor the architecture of both systems allowed integration on data level. So cumbersome and expensive technical infrastructure was needed to extract, transform and load (ETL) data into data warehouses and data marts to exploit the information assets, hidden in the records of the OLP systems.

One of the main problems was that OLTP applications were never conceived with a view on BI. For example, the first Customer Relationship Management (CRM) Applications stored the customer address as an attribute of the CUSTOMER entity instead of treating it as an entity in itself. When the first geographical information systems and geographical analytical systems came along, CRM developers remodelled the customer database and made ADDRESS a separate entity.

Imagine…

… a greenfield situation where you could develop any OLTP application from scratch and with an added BI perspective. What would it look like? What would be the guiding design principles to develop an OLTP application that plugs in seamlessly into a BI infrastructure.

Because, don’t get me wrong, there will still be a need for a separate and dedicated BI infrastructure as well as a specific BI architecture. But the ETL , the master data management (MDM) and data quality (DQ) management should no longer be a pain.

What would it take to relieve us from ETL, MDM and DQ chores?

Or how the simplest things are the hardest to realise. It would take:

- A canonical target data model and subsequently,

- A function in any OLTP application to drop off its data in that target format for the BI infrastructure to pick it up, be it via an enterprise service bus or via a bulk load procedure,

- A hub and spoke system where the master data objects are managed and replicated in both the OLTP and the BI applications,

- A uniform data quality policy, procedure and checking of accuracy, consistency, conformity and being in line with business rules of lower order (e.g. “a PARTY > PHYSICAL PERSON must have a birth date older than today”), but also of a higher order (e.g. a CUSTOMER who is under age should have a parent in the customer database whose co-signature must be on the ORDER form”)

So why is this not happening?

One explanation could be that application vendors claim they have full BI functionality built in in their solution. I remember a client with an ERP solution that contained over 300 standard analytics and reports of which the client used less than 3 %, eight to be exact. Not a great BI achievement I guess, but certainly an attempt to have 100% account control...

Another explanation could be the that we are still far away from a canonical model integrating transaction processing with BI, although data warehouse appliance vendors will tell you otherwise. Nevertheless, the dream has been kept alive for decades. I remember a data warehouse guru from the nineties who developed what he called verticals for BI to be used in telco, retail and finance, among others. They were just target models in the third normal form to set up a corporate data warehouse, so no OLTP included. He managed to sell them to a database vendor, bought a nice sailing boat (or rather a yacht) and disappeared from the BI stage. The database vendor peddled these models for a few years but in the years after 1999 I never came across these templates. And the vendor never mentioned their existence since then. Conclusion: we're still far away from a one-size-fits all transaction and analytics model.

What is the second best choice?

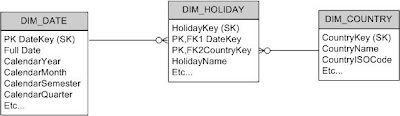

I can come up with a few ideas that will still be hard to realise. Think of an industry wide data type standard for transactions and BI purposes with the transformation rules documented. For example: a timestamp in a transaction database should always be in the form of 'YYYY-MM-DD HH:MM:SS' and two numbers are added for BI purposes: the standard day number, counting from an internationally accepted date like 1900-01-01 and the second number, which is a figure between 0 and 86.400 to represent the lowest grain of the time dimension.

The next step is to design industry dependent star schemas for basic analytics every organisation needs in that industry. There is after all, a growing body of knowledge in retail analytics, telco, finance, production, supply chain etc… If we can already achieve that, I will probably see my retirement date coming which is 2033-01-04 00:00:00.