Lingua Franca was involved in the data architecture of an organisation whose name and type are of no interest for the case I am making, namely that the way an organisation functions and is structured determines its data architecture. It is a textbook example of many organisations today.

The organisation was a merger of various business units which all used their own proprietary business processes, data standards and data definitions.

The CIO had a vision of well governed, standardised processes that would create a unified organisation that operated in a predictable and transparent manner.

Harmonised End to End Processes Are the Basis of Transparent Decision Making

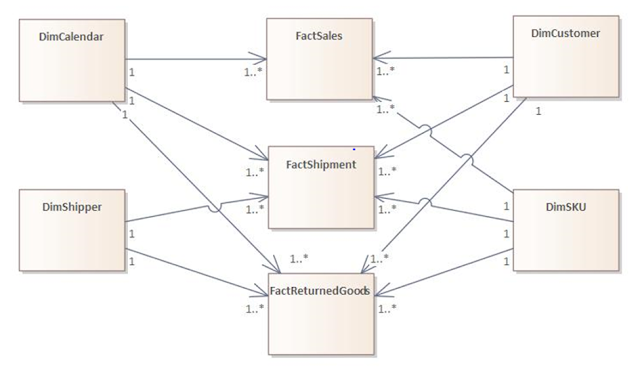

Shared facts and dimensions assure a scalable and manageable analytics architecture

The case for a Kimball approach in data warehousing was clear: if every department and every knowledge unit used the same processes, the shared facts and common dimension architecture was a no-brainer.

As the diagram suggests, it takes effort to make sure everybody is on the same page about the metrics and the dimensions, but once this is established, new iterations will go smoothly and build trust in the data.
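To make the shared facts and common dimensions idea concrete, here is a minimal sketch using Python's built-in `sqlite3` module. All table names, columns and figures (`dim_product`, `fact_sales`, `fact_shipments`, the business units) are hypothetical and only serve as an illustration; they are not taken from the organisation in this case. Two fact tables share one product dimension, so each can be aggregated separately and then joined on a common attribute.

```python
import sqlite3

# In-memory database with one shared (conformed) dimension and two fact tables.
# All names and values are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (
    product_key INTEGER PRIMARY KEY,
    sku TEXT,
    product_set TEXT
);
CREATE TABLE fact_sales (product_key INTEGER, business_unit TEXT, revenue REAL);
CREATE TABLE fact_shipments (product_key INTEGER, business_unit TEXT, shipping_cost REAL);

INSERT INTO dim_product VALUES
    (1, 'SKU-001', 'Starter set'),
    (2, 'SKU-002', 'Starter set'),
    (3, 'SKU-003', 'Pro set');
INSERT INTO fact_sales VALUES (1, 'BU-A', 100.0), (2, 'BU-B', 150.0), (3, 'BU-A', 400.0);
INSERT INTO fact_shipments VALUES (1, 'BU-A', 10.0), (2, 'BU-B', 12.0), (3, 'BU-A', 30.0);
""")


def revenue_and_cost_by_product_set(connection):
    """Aggregate each fact table to the shared dimension attribute, then join the results."""
    query = """
    WITH sales AS (
        SELECT d.product_set, SUM(f.revenue) AS revenue
        FROM fact_sales f JOIN dim_product d USING (product_key)
        GROUP BY d.product_set
    ),
    shipments AS (
        SELECT d.product_set, SUM(f.shipping_cost) AS shipping_cost
        FROM fact_shipments f JOIN dim_product d USING (product_key)
        GROUP BY d.product_set
    )
    SELECT s.product_set, s.revenue, sh.shipping_cost
    FROM sales s JOIN shipments sh USING (product_set)
    ORDER BY s.product_set
    """
    return connection.execute(query).fetchall()


for row in revenue_and_cost_by_product_set(conn):
    print(row)
```

Because both business units load their facts against the same `dim_product`, a new fact table only needs to reuse the existing keys to become comparable with everything already in place.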

For more than four years, resistance to change wore down the CIO, the data warehouse team and finally, once the CIO had left the organisation, the data architect. The new CIO decided not to continue the fight for harmonised processes and concluded that this reduced the need for a data warehouse. The reasoning: if every business unit used its own operational reporting, it would get rapid results at a far lower cost than with reports delivered from a data warehouse foundation. A new crew was onboarded: two ETL developers, two front-end developers and a data architect.

Satisfying Clients in Their Operational Silos Creates Technical Debt

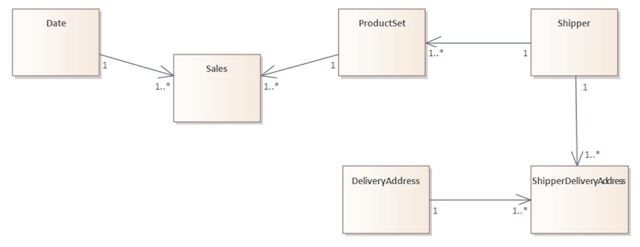

As this diagram suggests, the client defines his particular needs and asks for a report at product set level rather than SKU level, because he is only interested in product sets. The sets require special handling, so they are linked to specific shippers, each with its own delivery areas. Although this schema causes no problems for the front-end developer producing a nice-looking report, consolidating the information at corporate level will take time and effort.
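A small sketch of why that consolidation takes effort, under stated assumptions: one business unit reports at product set grain, another at SKU grain, and the two can only be combined through a SKU-to-set mapping that somebody has to build and maintain. The reports, shipper names, SKUs and the `consolidate_by_set` helper are all hypothetical.

```python
from collections import defaultdict

# Hypothetical silo report of business unit A, delivered at product-set grain.
report_bu_a = [
    {"product_set": "Starter set", "shipper": "North Express", "revenue": 250.0},
    {"product_set": "Pro set", "shipper": "South Freight", "revenue": 400.0},
]

# Hypothetical silo report of business unit B, delivered at SKU grain.
report_bu_b = [
    {"sku": "SKU-001", "revenue": 90.0},
    {"sku": "SKU-003", "revenue": 120.0},
]

# The mapping that has to be created and governed after the fact.
sku_to_set = {
    "SKU-001": "Starter set",
    "SKU-002": "Starter set",
    "SKU-003": "Pro set",
}


def consolidate_by_set(set_rows, sku_rows, mapping):
    """Roll both reports up to product-set grain, the coarsest grain they share.

    Returns the totals per product set and the SKUs that could not be mapped.
    """
    totals = defaultdict(float)
    unmapped = []
    for row in set_rows:
        totals[row["product_set"]] += row["revenue"]
    for row in sku_rows:
        product_set = mapping.get(row["sku"])
        if product_set is None:
            # Without a mapping entry the revenue cannot be consolidated at all.
            unmapped.append(row["sku"])
            continue
        totals[product_set] += row["revenue"]
    return dict(totals), unmapped


totals, unmapped = consolidate_by_set(report_bu_a, report_bu_b, sku_to_set)
print(totals, unmapped)
```

Note that the roll-up only works in one direction: the set-level report can never be broken down to SKU level without an allocation rule, which would be yet another definition to agree on.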

Reality will prove otherwise, of course. If every business unit uses its own definitions, metrics and dimensions, there is no chance of having correct, aggregated information for strategic decision making. To remedy this shortcoming, the new data architect will have to go back to 2008, the publication date of Bill Inmon’s DW 2.0. The idea is to create the operational report as fast as possible and, after delivering the product, refactor the underlying data to make them compatible with the data used in previous reports.

The result is a serious governance effort, lots of rework and an ever-growing DW 2.0 in the third normal form that one day may contain sufficient enterprise-wide data to produce meaningful aggregates for strategic direction. The Corporate Information Factory (CIF) revisited, so to speak.

Why the CIF Never Realised Any Value

In Inmon’s world, it was recommended to build the entire data warehouse before extracting any data marts. These data marts are aggregates, based on user profiles or functions in the organisation, and are groupings of detailed data that may change over time.

This led to many problems on the sites I have visited during my career as a business analyst and data architect.

First and foremost: by the time you have covered the entire scope of the CIF, the world has changed and you have to refactor entire parts of the data model and reload a good deal of data to be in sync with the new realities. Doing this on a 3NF data schema can be quite complex and consumes a lot of time and resources. And then there is the data mart management problem: if requirements for aggregations change over time, keeping track of historical changes in aggregations and trends is a real pain.
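The data mart management problem can be illustrated with a short sketch. The figures, SKUs and category names below are made up, and the `aggregate` helper is a hypothetical illustration, not a description of any particular tool. A mart that stores only totals under the old grouping cannot be restated when the grouping changes; the trend can only be rebuilt by going back to the detail rows.

```python
from collections import defaultdict

# Hypothetical detail rows: (year, sku, revenue).
detail = [
    ("2023", "SKU-001", 100.0),
    ("2023", "SKU-002", 150.0),
    ("2024", "SKU-001", 120.0),
    ("2024", "SKU-002", 130.0),
]

# Two versions of the same aggregation requirement.
old_grouping = {"SKU-001": "Hardware", "SKU-002": "Hardware"}
new_grouping = {"SKU-001": "Hardware", "SKU-002": "Accessories"}


def aggregate(rows, grouping):
    """Sum revenue per (year, category) under a given SKU-to-category grouping."""
    totals = defaultdict(float)
    for year, sku, revenue in rows:
        totals[(year, grouping[sku])] += revenue
    return dict(totals)


mart_old = aggregate(detail, old_grouping)
mart_new = aggregate(detail, new_grouping)

# Under the old grouping "Hardware" looks flat; under the new one it grows.
print(mart_old)
print(mart_new)
```

If only `mart_old` had been kept, the 2023 "Hardware" total of 250.0 could not be split into the new categories, so every change in grouping forces a rebuild from the detail data plus some record of which grouping applied when.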

About DW 2.0: the Data Quagmire

To anyone who hasn’t read this book: it’s the last attempt of the “father of data warehousing” to defend his erroneous Corporate Information Factory (CIF), adding some text data to a structured data warehouse in the third normal form. The book is full of conceptual drawings but that is all they are; not one implementation direction follows up on the drawings. Compare this to the Kimball books where every architectural concept is translated into SQL scripts and clear instructions and you know where the real value is.

With DW 2.0 the organisation is trying to salvage some of the operational reports’ value, but at a cost significantly higher than that of respecting the principle “Do IT right the first time”. The only good thing about this new approach is that nobody will notice the cost overrun, because it is spread over numerous operational reports over time. Only when the functional data marts need rebuilding may some people notice the data quagmire the organisation has stepped into.
